What you’ll learn

Introducing our comprehensive certificate program, designed for risk and compliance professionals who need to manage a quality management system in compliance with the EU AI Act. The program combines four essential courses that provide a deep dive into the critical aspects of AI risk management and regulatory compliance.

EU AI Act Compliance for High-Risk AI Systems: Gain a thorough understanding of the EU AI Act, focusing on the identification of high-risk AI systems, the obligations of developers, strategies for implementing the requirements, and achieving conformity through conformity assessments.

Algorithmic Risk & Impact Assessments: Learn to assess the risks and impacts of AI algorithms, ensuring that potential harms are identified and mitigated effectively. This course covers methodologies for conducting assessments and strategies for managing algorithmic risks.

AI Governance & Risk Management: Explore the principles of AI governance and the best practices for managing risks associated with AI systems. This course provides insights into establishing robust governance frameworks and risk management strategies to ensure responsible AI deployment.

Bias, Accuracy, & the Statistics of AI Testing: Delve into the critical issues of bias and accuracy in AI systems, understanding the statistical methods for testing and validating AI models. This course equips participants with the knowledge to address bias and ensure the accuracy of AI systems.

Students will also be added to our community Slack channel, where they can connect with other students, reach BABL AI staff directly for help, and access additional resources, including weekly live Q&A sessions with our CEO and instructor, Dr. Shea Brown.

Upon completing this certificate program, participants will be equipped with the skills and knowledge to navigate the complexities of AI risk management and regulatory compliance. They will be prepared to implement and oversee quality management systems that adhere to the EU AI Act, ensuring the ethical and safe deployment of AI technologies. Join this program to become a proficient risk and compliance professional in the rapidly evolving field of AI.

Click the “Learn More” button to download our “EU AI Act: Quality Management System Oversight Certification Handbook” and learn all there is to know about this program.

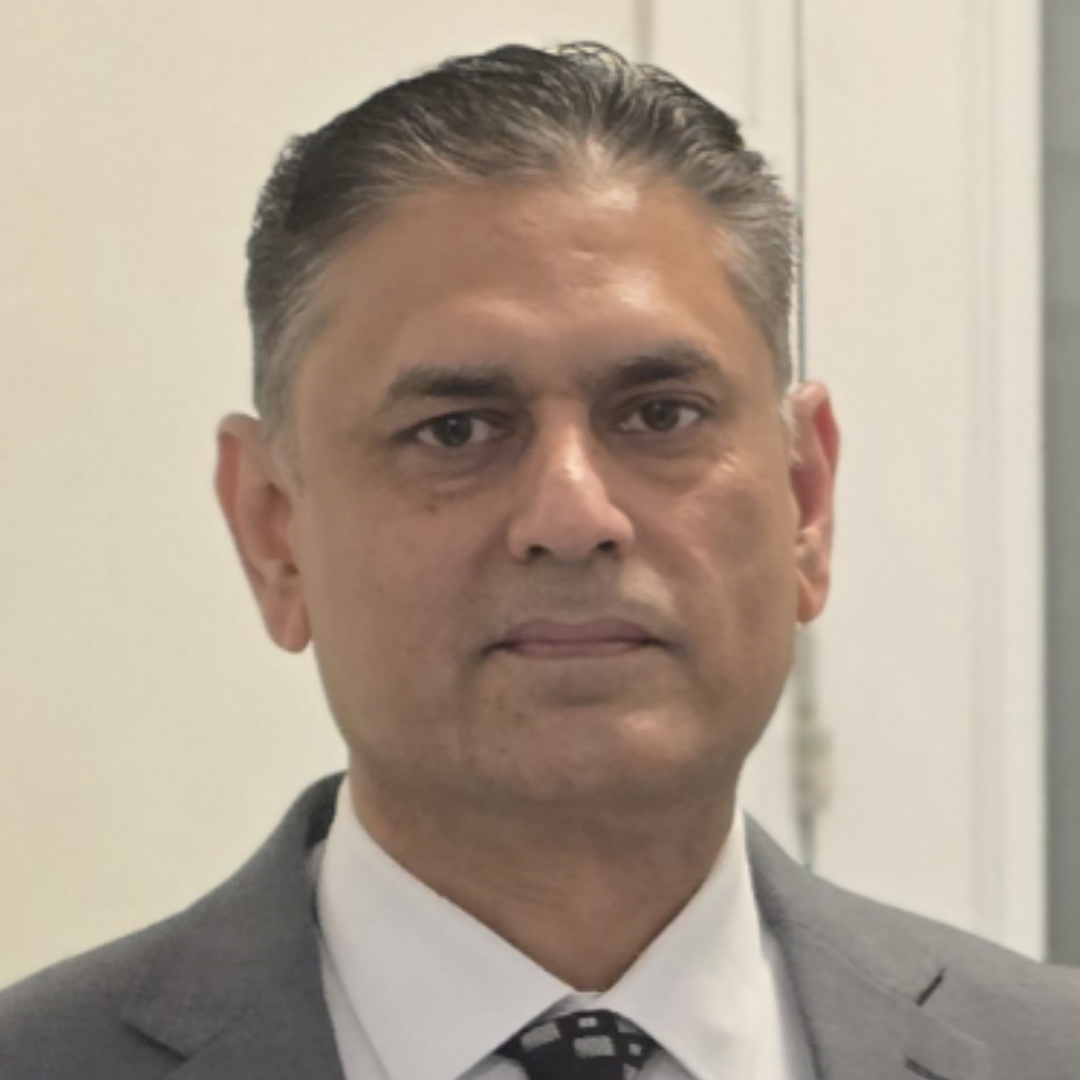

About the Instructor

The instructor of this program is Dr. Shea Brown, Founder and CEO of BABL AI.

Shea is an internationally recognized leader in the space of AI and algorithm auditing, bias in machine learning, AI governance and Responsible AI. He has testified and advised on numerous AI regulations across the US and the EU.

He is a Fellow and Board Member at ForHumanity, a non-profit working to set standards for algorithm auditing and organizational governance of artificial intelligence.

He is also a founding member of the International Association of Algorithmic Auditors, a community of practice that aims to advance and organize the algorithmic auditing profession, promote AI auditing standards, certify best practices, and contribute to the emergence of Responsible AI.

Shea also holds a PhD in Astrophysics from the University of Minnesota and, until recently, was a faculty member in the Department of Physics & Astronomy at the University of Iowa, where he was recognized for teaching excellence by the College of Liberal Arts & Sciences.

Choose a Pricing Option

EU AI Act - Quality Management System Certification

Full Package of 4 Courses

Additional qualifying discounts are available

Contact us today to learn more